OpenAI dropped details on their o3 reasoning model last week, and the benchmark results are absurd. We’re talking near-human performance on complex reasoning tasks, coding challenges that stump senior developers, and math problems that would make most PhDs sweat.

Cool story. Except you can’t actually use it yet. Limited research preview only. So while the tech press loses its mind over what’s coming, let’s talk about what you can actually do right now with the AI that’s available today.

Better prompts. Better AI output.

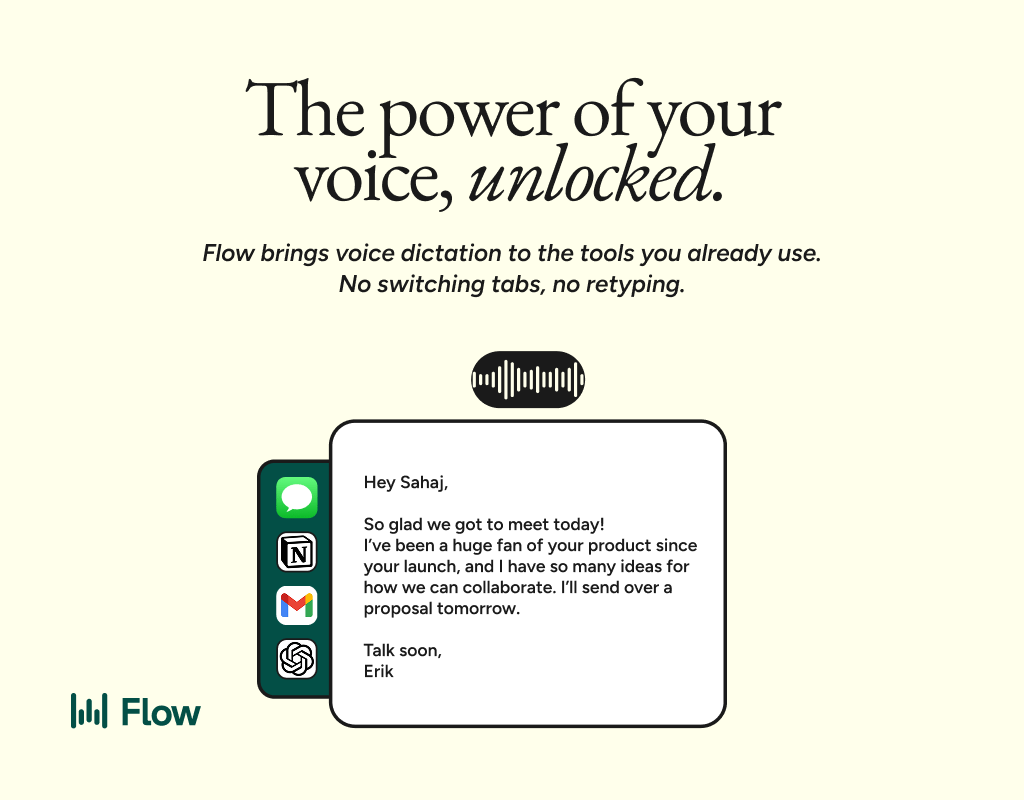

AI gets smarter when your input is complete. Wispr Flow helps you think out loud and capture full context by voice, then turns that speech into a clean, structured prompt you can paste into ChatGPT, Claude, or any assistant. No more chopping up thoughts into typed paragraphs. Preserve constraints, examples, edge cases, and tone by speaking them once. The result is faster iteration, more precise outputs, and less time re-prompting. Try Wispr Flow for AI or see a 30-second demo.

THE THREE THINGS THAT ACTUALLY MATTER

1. REASONING MODELS ARE HERE (JUST NOT FROM OPENAI)

While everyone waits for o3, Claude Sonnet 4.5 is shipping with extended thinking right now. I’ve been using it for two weeks. It’s solving complex business problems that required human analysts a month ago.

Example: I fed it three months of sales data, customer feedback, and market research. Asked it to identify why conversion rates dropped in Q4 and suggest fixes. It spent 90 seconds thinking, then delivered an analysis that matched what our $15K consultant recommended. Except Claude cost me $4 in API credits.

The gap between “announced” and “available” matters. o3 might be better on paper. Claude Sonnet 4.5 is better in reality because I can actually use it.

2. THE MODEL WARS ARE MAKING EVERYTHING CHEAPER

OpenAI, Anthropic, and Google are in an arms race. That’s great news for anyone actually using this stuff to run a business. Prices are dropping while capabilities are rising.

Six months ago, running complex AI workflows cost serious money. Today? I’m processing hundreds of documents, generating thousands of words of content, and analyzing massive datasets for less than the cost of one VA working part-time.

The smart play isn’t picking sides. It’s using whichever model is best for each specific task. Galaxy.ai makes this stupid easy by giving you access to all major models through one interface.

3. VIDEO AI IS FINALLY GETTING GOOD

The latest updates to tools like HeyGen and Runway are legitimately impressive. We’re past the uncanny valley stage. The AI-generated videos actually look professional now.

I’m testing HeyGen for client presentations. Upload a script, pick an avatar, generate a video. Takes about 10 minutes. Quality is good enough that clients can’t tell it’s AI unless I tell them.

For anyone doing sales outreach, educational content, or social media marketing, this changes the game. You can now produce video content at a scale that was impossible six months ago.

WHAT THIS MEANS FOR YOUR AI STACK

The AI landscape is moving faster than most people can keep up with. New models drop weekly. Features that were science fiction three months ago are production-ready today.

Here’s my take after watching this space obsessively for two years: don’t chase the latest model. Build systems that can adapt. Use tools that let you swap models without rebuilding everything.

The companies winning right now aren’t the ones using the “best” AI. They’re the ones using AI consistently across their operations. They’ve built workflows that work regardless of which specific model is running under the hood.

TACTICAL TAKEAWAY #1: BUILD A MODEL-AGNOSTIC RESEARCH SYSTEM

Here’s how to stop caring which model is “best”:

1. Use Make.com to build your workflow

2. Connect to Galaxy.ai for multi-model access

3. Set up a simple router: speed-critical tasks go to the fastest model, cost-sensitive tasks go to the cheapest, quality-critical tasks go to the most capable

4. Add a fallback: if one model fails or is slow, automatically route to the next option

Example workflow: A customer question comes in and Galaxy routes it to GPT-4o for speed. If response time exceeds 5 seconds, it switches to Claude. If Claude is overloaded, it falls back to Gemini 3.0. You get an answer either way, and you’re always using the best model that’s actually responding.
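
If you’d rather see the router and fallback logic as code, here’s a minimal Python sketch of the same idea. To be clear about what’s assumed: this is not Make.com’s or Galaxy.ai’s API. The names `ROUTES` and `ask_with_fallback`, the model orderings, and the three stub functions are all hypothetical stand-ins. In a real setup, each stub would be replaced by a call to whatever client you actually use.

```python
import concurrent.futures as cf
import time
from typing import Callable, Dict, List, Tuple

# A "model" here is just any function that takes a prompt and returns text.
ModelFn = Callable[[str], str]

# Hypothetical priority chains per task type. The orderings are illustrative
# placeholders, not benchmark results; fill in your own.
ROUTES: Dict[str, List[str]] = {
    "speed": ["gpt-4o", "claude", "gemini"],
    "cost": ["gemini", "gpt-4o", "claude"],
    "quality": ["claude", "gpt-4o", "gemini"],
}


def ask_with_fallback(
    prompt: str,
    chain: List[str],
    models: Dict[str, ModelFn],
    timeout_s: float = 5.0,
) -> Tuple[str, str]:
    """Try each model in order; return (model_name, answer) from the first
    one that responds without error inside the time limit."""
    errors: List[str] = []
    for name in chain:
        pool = cf.ThreadPoolExecutor(max_workers=1)
        future = pool.submit(models[name], prompt)
        try:
            return name, future.result(timeout=timeout_s)
        except cf.TimeoutError:
            errors.append(f"{name}: no answer within {timeout_s}s")
        except Exception as exc:  # overloaded, rate limited, etc.
            errors.append(f"{name}: {exc}")
        finally:
            # Don't block on a slow call. Note the abandoned call keeps
            # running in the background until it finishes on its own.
            pool.shutdown(wait=False)
    raise RuntimeError("all models failed: " + "; ".join(errors))


# Stub models that simulate the three situations in the example workflow.
def slow_model(prompt: str) -> str:
    time.sleep(0.3)
    return "too late"


def overloaded_model(prompt: str) -> str:
    raise RuntimeError("overloaded")


def healthy_model(prompt: str) -> str:
    return f"answer to: {prompt}"


if __name__ == "__main__":
    stubs = {"gpt-4o": slow_model, "claude": overloaded_model, "gemini": healthy_model}
    # Short timeout so the demo finishes quickly; the workflow above uses 5 seconds.
    print(ask_with_fallback("Where is my order?", ROUTES["speed"], stubs, timeout_s=0.05))
```

The design choice worth copying is that the workflow only ever knows about "a function that takes a prompt and returns text". Swapping in a new model means adding one entry to the dictionary and reordering a list, not rebuilding anything.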

THE INTEGRATION PROBLEM (AND HOW TO FIX IT)

The biggest issue isn’t which AI model to use. It’s getting AI to actually work with your existing tools and workflows. Most people get stuck here.

You’ve got data in Google Sheets, communication in Slack, customer info in a CRM, projects in Asana or Notion. Making AI useful means connecting it to all of that. Otherwise it’s just a fancy chatbot.

Solution: Make.com is the bridge. It connects to pretty much everything and lets you route data through AI models without writing code. I’ve built entire business operations this way.

TACTICAL TAKEAWAY #2: THE 5-WORKFLOW SYSTEM EVERY BUSINESS NEEDS

Build these five AI workflows this week:

1. CUSTOMER INTAKE: Form submission triggers AI to research the company, draft personalized response, create CRM record, schedule follow-up tasks.

2. CONTENT REPURPOSING: New blog post triggers AI to create social media versions, email newsletter, video script, and LinkedIn article. All automatically published on schedule.

3. DATA ANALYSIS: Weekly export from your systems goes to AI for pattern analysis, anomaly detection, and summary report delivered to your inbox every Monday.

4. MEETING PREP: Calendar event triggers AI to research attendees, pull relevant history, draft agenda, compile briefing document.

5. QUALITY CONTROL: Before anything customer-facing goes out, AI checks for tone, clarity, brand consistency, and potential issues.

These five workflows eliminate roughly 20 hours of manual work per week. That’s not an exaggeration. That’s what I’m seeing across multiple businesses.

THE REAL STORY NOBODY’S TELLING

Everyone’s focused on which company has the best model. That’s the wrong question. The right question is: how do I use whatever’s available right now to solve actual problems?

o3 will eventually be available. It’ll probably be amazing. And then six months later, something better will come along. This cycle never stops.

The people making money aren’t waiting for the perfect model. They’re building with what works today and swapping in better models when they become available. The infrastructure matters more than the model.

Want the exact multi-model workflows I’m running? The AI WORKFLOW BLUEPRINT includes complete Make.com scenarios, decision trees for which model to use when, and prompt templates optimized for each platform.

👉 COMMENT “BLUEPRINT” and I’ll send you the details.

WHAT TO DO RIGHT NOW

Stop waiting for the next big model release. Start building with what’s available. Focus on systems that can adapt as new models drop.

The AI landscape is moving too fast for loyalty. Stay flexible. Stay testing. Stay building.

Jordan Hale

The AI Newsroom

Seattle, WA

Real AI. Real Results. No Hype.